
Most Recent

Spike-driven Transformer V2

Man Yao et al.

A general Transformer-based SNN architecture, termed as "Meta-SpikeFormer", whose goals are: (1) Lower-power, supports the spike-driven paradigm that there is only sparse addition in the network; (2) Versatility, handles various vision tasks; (3) High-performance, shows overwhelming performance advantages over CNN-based SNNs; (4) Meta-architecture, provides inspiration for future next-generation Transformer-based neuromorphic chip designs….

Gated Attention Coding for Training High-performance and Efficient Spiking Neural Networks

Xuerui Qiu et al.

A plug-and-play module that leverages the multi-dimensional gated attention unit to efficiently encode inputs into powerful representations before feeding them into the SNN architecture….

Neuromorphic Computing Guide

Michael Royal

A guide covering Neuromorphic Computing including the applications, libraries and tools that will make you better and more efficient with Neuromorphic Computing development….

NEUROTECH

NEUROTECH

Creates and leads the Neuromorphic Computing Technology community in Europe by catalyzing research and collaboration….

Open Neuromorphic Collection

Open Neuromorphic

Open Neuromorphic (ONM) provides two things: (i) A curated list of software frameworks to make it easier for everyone to find the right tool. (ii) A platform for your code. If you wish to create a new repository or migrate your existing code to ONM, please get in touch with us. You will be made a member of this organisation immediately….

NatSGD

NatSGD: A Dataset with Speech, Gestures, and Demonstrations for Robot Learning in Natural Human-Robot Interaction

Recent advancements in multimodal Human-Robot Interaction (HRI) datasets have highlighted the fusion of speech and gesture, expanding robots’ capabilities to absorb explicit and implicit HRI insights. However, existing speech-gesture HRI datasets often focus on…

Tonic

Institute of Neuromorphic Engineering

A tool to facilitate the download, manipulation and loading of event-based/spike-based data. It's like PyTorch Vision but for neuromorphic data!…
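As a rough illustration of what "manipulation" of event-based data can mean, the sketch below bins a list of events into fixed-duration count frames. It is plain Python and does not use or reproduce Tonic's API; the `(x, y, timestamp_us, polarity)` event layout, the function name `events_to_frames`, and the sample events are assumptions made for this example, and polarity is ignored for simplicity.

```python
from typing import List, Tuple

# Hypothetical event layout: (x, y, timestamp in microseconds, polarity).
Event = Tuple[int, int, int, int]


def events_to_frames(
    events: List[Event], width: int, height: int, window_us: int
) -> List[List[List[int]]]:
    """Count events per pixel inside consecutive time windows."""
    if not events:
        return []
    t0 = min(e[2] for e in events)
    t_end = max(e[2] for e in events)
    n_frames = (t_end - t0) // window_us + 1
    # frames[frame_index][row][column] holds an event count.
    frames = [[[0] * width for _ in range(height)] for _ in range(n_frames)]
    for x, y, t, _polarity in events:
        frames[(t - t0) // window_us][y][x] += 1
    return frames


# Made-up sample events on a 2 x 2 sensor.
events = [(0, 0, 0, 1), (1, 0, 400, 0), (1, 1, 1200, 1), (1, 1, 1900, 1)]
frames = events_to_frames(events, width=2, height=2, window_us=1000)
```

With a 1000 µs window, the first two events fall into the first frame and the last two into the second.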

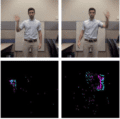

A Low Power, Fully Event-Based Gesture Recognition System

IBM Research

This dataset was used to build the real-time, gesture recognition system described in the CVPR 2017 paper titled “A Low Power, Fully Event-Based Gesture Recognition System.” The data was recorded using a DVS128. The dataset contains 11 hand gestures from 29 subjects under 3 illumination conditions and is released under a Creative Commons Attribution 4.0 license….

Python Tutorial for Spiking Neural Network

Shikhar Gupta

This is a Python implementation of a hardware efficient spiking neural network. It includes modified learning and prediction rules which could be realised on hardware in an energy efficient way. The aim is to develop a network which could be used for on-chip learning and prediction….

Neuromatch Academy Tutorials

Neuromatch Academy

We have curated a curriculum that spans most areas of computational neuroscience (a hard task in an increasingly big field!). We will expose you to both theoretical modeling and more data-driven analyses. This section will overview the curriculum….

Spike-driven Transformer V2

Man Yao et al.

A general Transformer-based SNN architecture, termed as "Meta-SpikeFormer", whose goals are: (1) Lower-power, supports the spike-driven paradigm that there is only sparse addition in the network; (2) Versatility, handles various vision tasks; (3) High-performance, shows overwhelming performance advantages over CNN-based SNNs; (4) Meta-architecture, provides inspiration for future next-generation Transformer-based neuromorphic chip designs….
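The "only sparse addition" idea can be sketched in a few lines: when inputs are binary spikes, a weighted sum needs no multiplications, only additions of the weights attached to inputs that fired. This is a toy illustration of that principle, not the Meta-SpikeFormer implementation; the function names, weights, and spike vector are made up for the example.

```python
from typing import List


def dense_weighted_sum(weights: List[List[float]], inputs: List[int]) -> List[float]:
    """Reference: ordinary multiply-accumulate over every input."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def spike_driven_sum(weights: List[List[float]], spikes: List[int]) -> List[float]:
    """Add weights only for inputs that spiked (value 1); no multiplications."""
    active = [i for i, s in enumerate(spikes) if s == 1]
    return [sum(row[i] for i in active) for row in weights]


# Hypothetical 2 x 4 weight matrix and a sparse binary spike vector.
weights = [
    [0.5, -1.0, 2.0, 0.25],
    [1.5, 0.0, -0.5, 1.0],
]
spikes = [1, 0, 0, 1]

out = spike_driven_sum(weights, spikes)
```

Here only two of the four inputs are active, so each output is the sum of two weights, and the result matches the dense multiply-accumulate.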

Beam-Prop

Alexandros Pitilakis and others

Beam Propagation Method (BPM) for planar photonic integrated circuits (PIC), implemented in MATLAB with finite-differences….

A Complete Neuromorphic Solution to Outdoor Navigation and Path Planning

Tiffany Hwu and others

A complete neuromorphic solution to outdoor navigation and path planning. The proposed solution is based on a spiking neural network that mimics the biological nervous system of insects. The solution is tested in a real-world scenario and demonstrates promising results in terms of accuracy and efficiency….

Spiking Oculomotor Network for Robotic Head Control

Ioannis Polykretis and others

This package is the Python and ROS implementation of a spiking neural network on Intel's Loihi neuromorphic processor mimicking the oculomotor system to control a biomimetic robotic head….

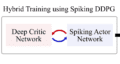

Spiking Neural Network for Mapless Navigation

Guangzhi Tang and others

A PyTorch implementation of the Spiking Deep Deterministic Policy Gradient (SDDPG) framework. The hybrid framework trains a spiking neural network (SNN) for energy-efficient mapless navigation on Intel's Loihi neuromorphic processor….

pyNAVIS: an open-source cross-platform Neuromorphic Auditory VISualizer

Juan Pedro Dominguez-Morales

An open-source cross-platform Python module for analyzing and processing spiking information obtained from neuromorphic auditory sensors. It is intended primarily for use with a NAS (Neuromorphic Auditory Sensor), but can work with any other cochlea sensor….

Xylo Audio HDK

Synsense

A low-power digital SNN inference chip, including a dedicated audio interface. The Xylo-Audio HDK includes an on-board microphone and direct analog audio input for audio processing applications….

Dynamic Audio Sensor

iniLabs

An asynchronous event-based silicon cochlea. The board takes stereo audio inputs; the custom chip asynchronously outputs a stream of address-events representing activity in different frequency ranges. As such it is a silicon model of the cochlea, the auditory inner ear….
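To make the notion of an address-event stream concrete, here is a minimal sketch that models each event as a timestamp plus an address, then counts spikes per frequency channel for one ear. The field names (`timestamp_us`, `channel`, `ear`), the helper `spike_counts`, and the sample events are hypothetical; they are not taken from the sensor's actual data format.

```python
from collections import Counter
from typing import List, NamedTuple


class AddressEvent(NamedTuple):
    timestamp_us: int  # event time in microseconds
    channel: int       # frequency-channel address (hypothetical layout)
    ear: int           # 0 = left, 1 = right (assumed encoding)


def spike_counts(events: List[AddressEvent], ear: int) -> Counter:
    """Count how many events each frequency channel emitted for one ear."""
    return Counter(e.channel for e in events if e.ear == ear)


# Made-up stream: events arrive asynchronously, ordered by timestamp.
events = [
    AddressEvent(10, 3, 0),
    AddressEvent(12, 3, 1),
    AddressEvent(25, 7, 0),
    AddressEvent(31, 3, 0),
]

left = spike_counts(events, ear=0)
right = spike_counts(events, ear=1)
```

Channels that never fired simply do not appear in the counter, which mirrors the sparsity of an event-based representation.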

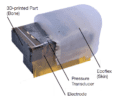

Artificial Robot Skin

Institute for Cognitive Systems, Technical University of Munich

A multimodal tactile-sensing module for humanoid robots. By integrating various sensing technologies, this module enables robots to perceive complex tactile information such as force, pressure, temperature, and texture….

Event-Driven Visual-Tactile Sensing and Learning for Robots

Tasbolat Taunyazov

NeuTouch is a neuromorphic fingertip tactile sensor that scales well with the number of taxels. The proposed Visual-Tactile Spiking Neural Network (VT-SNN) also enables fast perception when coupled with event sensors. The proposed visual-tactile system (using the NeuTouch and Prophesee event camera) is evaluated on two robot tasks: container classification and rotational slip detection….

Skin-Inspired Flexible and Stretchable Electrospun Carbon Nanofiber Sensors for Neuromorphic Sensing

Debarun Sengupta and others

An approach entailing carbon nanofiber–polydimethylsiloxane composite-based piezoresistive sensors, coupled with spiking neural networks, to mimic skin-like sensing….

Speck smart vision sensor

Synsense

Speck is a neuromorphic vision system-on-chip, combining an event-based vision sensor with spiking CNN cores for inference on vision tasks. The Speck HDK includes an interchangeable lens, and full resources for developing neuromorphic vision applications….

Metavision and the IMX636ES Sensor

Sony and Prophesee

Metavision Sensing and Software offer all the resources to work with event-driven cameras. The sensational IMX636ES is the new event-driven sensor created by a collaboration between Sony and PROPHESEE. The Metavision software offers 95 algorithms, 67 code samples and 11 ready-to-use applications to be used with this new generation of cameras….

DVXplorer Series

Inivation AG

High-resolution event-based sensors that output only event streams. Compared to the DAVIS series, they provide 640 x 480 resolution, but cannot output greyscale images….

NxTF: An API and Compiler for Deep Spiking Neural Networks on Intel Loihi

Bodo Rueckauer and others

An open-source software platform for compiling and running deep spiking neural networks (SNNs) on the Intel Loihi neuromorphic hardware platform. The paper introduces the NxTF API, which provides a simple interface for defining and training SNNs using common deep learning frameworks, and the NxTF compiler, which translates trained SNN models into executable code for the Loihi chip….

NeuroKit2

Nanyang Technological University

A user-friendly package providing easy access to advanced biosignal processing routines. Researchers and clinicians without extensive knowledge of programming or biomedical signal processing can analyze physiological data with only two lines of code….

Telluride Decoding Toolbox

Telluride Engineering Workshop Participants – Institute of Neuromorphic Engineering

A set of tools that allow users to decode brain signals into the signals that generated them. It can determine whether the signals come from visual or auditory stimuli, and whether they are measured with EEG, MEG, ECoG or any other neural response for decoding. This toolbox is provided as Matlab and Python code, along with documentation and some sample EEG and MEG data….

Gated Attention Coding for Training High-performance and Efficient Spiking Neural Networks

Xuerui Qiu et al.

A plug-and-play module that leverages the multi-dimensional gated attention unit to efficiently encode inputs into powerful representations before feeding them into the SNN architecture….

pyNAVIS: an open-source cross-platform Neuromorphic Auditory VISualizer

Juan Pedro Dominguez-Morales

An open-source cross-platform Python module for analyzing and processing spiking information obtained from neuromorphic auditory sensors. It is intended primarily for use with a NAS (Neuromorphic Auditory Sensor), but can work with any other cochlea sensor….

PyAER

Yuhuang Hu

Low-level Python APIs for accessing neuromorphic devices. A combination of a Pythonic libcaer and a light-weight "ROS". PyAER serves as an agile package that focuses on fast development and extensibility….
